Changbal Machine Development Plan

Keunsoo Yoon (Independent Researcher)

austiny@snu.ac.kr / austiny@gatech.edu

1. Introduction

This development plan outlines the design and implementation of the 'Changbal Machine,' a novel AI-driven system that reinterprets NP problems not merely as matters of 'complexity' but as opportunities for 'emergent solvability.' The machine seeks to actively control these emergent transitions, ultimately converting intractable real-world problems into 'solvable states' (∣P⟩). This endeavor aims to extend existing computational complexity theory, deepen the role of AI, and open a new frontier in interdisciplinary research.

2. Theoretical Background

The core of the Changbal Machine is rooted in the 'Changbal Theory.'

- Changbal Function: The probability of satisfiability $P_{\text{sol}}(d)$ for NP problems exhibits a sigmoid-like transition as constraint density $d$ increases, showing abrupt changes within a specific 'Changbal Region.' This function is defined as:

$$P_{\text{sol}}(d) = \frac{1}{1 + e^{a(d - d_c)}}$$

Here, $d$ represents constraint density, $d_c$ is the critical threshold, and $a$ controls the steepness of the transition.

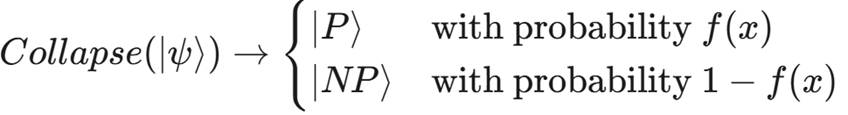

- Superposed State and Emergent Collapse: A problem instance exists in a superposed state (∣ψ⟩=α∣P⟩+β∣NP⟩) between a 'solvable state' (∣P⟩) and an 'unsolvable state' (∣NP⟩). Upon entering the 'Changbal Region,' this state collapses into either ∣P⟩ or ∣NP⟩ with a probability given by f(x).

- 'Ordeal Data' and Conditions for Emergence: Emergence is not simply the result of 'a state of much sorrow.' Rather, revolutionary transitions are most likely in situations that are "complex, filled with conflict, and unsolvable by conventional methods." This 'ordeal data' is an essential precondition for emergence and a critical input for driving the system toward its 'critical threshold.'
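The following sketch makes the Changbal Function concrete. It assumes a decreasing logistic form, $P_{\text{sol}}(d) = 1/(1 + e^{a(d - d_c)})$ with $a > 0$. Under this form the probability falls from 1 to 0 as density passes $d_c$, which is how satisfiability behaves in random 3-SAT, where $d_c \approx 4.27$ clauses per variable.

The sketch also computes the interval over which $P_{\text{sol}}$ drops from 0.9 to 0.1. This is one possible operational definition of the 'Changbal Region', offered as an assumption rather than a definition from this plan. Solving $P_{\text{sol}}(d) = p$ gives $d = d_c + \ln((1-p)/p)/a$, so the region's width is $2\ln 9 / a$. The steepness value `a = 10.0` is illustrative only.

```python
import math


def changbal_p_sol(d: float, d_c: float, a: float) -> float:
    """P_sol(d) = 1 / (1 + exp(a * (d - d_c))), assuming a > 0 (decreasing in d)."""
    z = a * (d - d_c)
    # Evaluate the logistic in a numerically stable way for large |z|.
    if z >= 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def changbal_region(d_c: float, a: float, hi: float = 0.9, lo: float = 0.1) -> tuple[float, float]:
    """Density interval over which P_sol falls from `hi` to `lo`.

    Solving P_sol(d) = p for d gives d = d_c + ln((1 - p) / p) / a.
    """
    d_start = d_c + math.log((1 - hi) / hi) / a
    d_end = d_c + math.log((1 - lo) / lo) / a
    return d_start, d_end


if __name__ == "__main__":
    D_C, A = 4.27, 10.0  # illustrative: random 3-SAT threshold, assumed steepness
    for d in (3.5, 4.0, 4.27, 4.5, 5.0):
        print(f"d = {d:4.2f}   P_sol = {changbal_p_sol(d, D_C, A):.3f}")
    start, end = changbal_region(D_C, A)
    print(f"Changbal Region (0.9 -> 0.1): [{start:.3f}, {end:.3f}]")
```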

3. Changbal Machine Architecture

The Changbal Machine is structured into three main modules: Data Ingestion and Analysis, Emergence Condition Modeling, and Emergence Control and Prediction.

3.1. Data Ingestion and Analysis Module

- Multilingual/Multi-version Narrative Data: Uses large bodies of narrative text, such as the Bible (NIV, KJV, Korean, German versions, etc.), biographies, literary works, and scripts, as input data. This diversity of sources is intended to improve the AI's ability to generalize.

- Preprocessing and Embedding: Input texts are transformed into high-dimensional vector embeddings for sentences/paragraphs using Transformer-based language models optimized for each language (e.g., BERT, RoBERTa, KoBERT).

- Transformer-Based Emotion Data Processing (BERT, RoBERTa): Transformer models are adopted because they capture subtle emotional nuances and context-dependent meanings more precisely than earlier architectures. Recurrent models such as RNNs and LSTMs process text one token at a time and struggle to retain long-range context within a sentence. Transformers instead use self-attention, which lets every word attend directly to every other word, so interactions between critical words anywhere in the sentence are learned explicitly. BERT and RoBERTa, pre-trained on large corpora, provide representations of emotional expressions and narrative structures that can be fine-tuned for biblical texts and diverse multilingual literature. This capability is essential for the Changbal Machine to model realistic narrative flows and human emotions in detail.

Key Justifications:

- Capability to capture fine emotional nuances through self-attention mechanisms, considering the entire context of sentences.

- Multilingual support enabling integrated processing across various cultural data sources.

- Using pre-trained language models reduces the initial training burden and improves performance.
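The self-attention mechanism described above can be sketched as single-head scaled dot-product attention. This is a minimal NumPy illustration with random stand-in embeddings and weights. It is not BERT or RoBERTa themselves, which stack many multi-head attention layers with trained parameters. The function name `self_attention` is hypothetical.

```python
import numpy as np


def self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model) token embeddings.
    Returns (output, weights); weights[i, j] is how strongly token i attends to token j.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Row-wise softmax, shifted by each row's max for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 8, 4
x = rng.normal(size=(seq_len, d_model))  # stand-in for 5 token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape)          # one d_head-dimensional output per token
print(attn.sum(axis=-1))  # each row of attention weights sums to 1
```

Every output row mixes information from all five tokens at once. This is the whole-sentence context the paragraph above contrasts with token-by-token recurrent models.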

- Unsupervised Clustering-Based Critical Point Detection (DBSCAN, K-Means): Unsupervised learning is used for critical point detection because emergence phenomena are inherently dynamic. They cannot be defined by fixed criteria and must instead be discovered from the data itself. DBSCAN, a density-based clustering algorithm, is particularly effective at identifying dense regions of data, such as peaks of hardship or significant emotional fluctuations, and it labels isolated points as noise rather than forcing them into a cluster. K-Means is fast and easy to interpret, making it useful for an initial exploratory pass that yields cluster centroids. The two algorithms complement each other, extracting candidate emergence points directly from the data without hand-specified emergence thresholds. Each algorithm still requires its own hyperparameters: a neighborhood radius ε and a minimum point count for DBSCAN, and the number of clusters k for K-Means.

Key Justifications:

- DBSCAN's density-based clustering allows dynamic and flexible critical point detection.

- K-Means' rapid computation and clear initial structural analysis improve performance.

- Ability to derive emergence points directly from structural characteristics inherent in data without predefined criteria.
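The division of labor between the two clustering algorithms can be illustrated with small from-scratch implementations on made-up (time, negative-emotion score) points. In practice a library such as scikit-learn would be used. Here, DBSCAN labels the sparse outlier as noise (-1). K-Means then partitions the remaining points into k groups. It is initialized deterministically with farthest-first seeding, a simplification of k-means++. The data and the `eps` and `min_samples` values are assumptions for illustration.

```python
import numpy as np


def pairwise_dist(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Euclidean distance between every row of a and every row of b."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)


def dbscan(points: np.ndarray, eps: float, min_samples: int) -> np.ndarray:
    """Minimal DBSCAN. Returns one label per point; -1 marks noise."""
    n = len(points)
    dists = pairwise_dist(points, points)
    # Each neighborhood includes the point itself, as in scikit-learn.
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])
    labels = np.full(n, -1)
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        # Grow a new cluster outward from the unassigned core point i.
        labels[i] = cluster
        stack = [i]
        while stack:
            j = stack.pop()
            if not is_core[j]:
                continue  # border points join a cluster but do not expand it
            for k in neighbors[j]:
                if labels[k] == -1:
                    labels[k] = cluster
                    stack.append(k)
        cluster += 1
    return labels


def kmeans(points: np.ndarray, k: int, n_iter: int = 100):
    """Lloyd's K-Means with deterministic farthest-first initialization."""
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        d = pairwise_dist(points, np.array(centers)).min(axis=1)
        centers.append(points[np.argmax(d)])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        labels = pairwise_dist(points, centers).argmin(axis=1)
        new_centers = np.array([
            points[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    labels = pairwise_dist(points, centers).argmin(axis=1)
    return labels, centers


# Made-up (time, negative-emotion score) points: a calm stretch, a hardship peak, one outlier.
calm = np.array([[0.0, 0.10], [0.1, 0.15], [0.2, 0.12], [0.3, 0.10]])
peak = np.array([[2.0, 0.90], [2.1, 0.95], [2.2, 0.92], [2.3, 0.88]])
outlier = np.array([[5.0, 0.50]])
X = np.vstack([calm, peak, outlier])

print(dbscan(X, eps=0.5, min_samples=3))  # calm -> 0, peak -> 1, outlier -> -1 (noise)
km_labels, km_centers = kmeans(X[:8], k=2)
print(km_labels, km_centers)              # two groups and their centroids
```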

- Optimal Emergence Intervention Strategies via Deep Reinforcement Learning (DRL): Deep Reinforcement Learning algorithms, specifically Actor-Critic and DQN, are selected because emergence interventions are best understood as ongoing action policies rather than one-off solutions. DRL uses a state-action-reward framework to learn empirically which interventions are most effective at specific states within a narrative flow. In the Actor-Critic model, the Actor proposes actions and the Critic estimates their value, allowing continuous and precise adjustment of interventions in complex narrative contexts. DQN, by contrast, is efficient in discrete action spaces. This makes it well suited to choosing clear intervention points and action types within a particular narrative flow.

Key Justifications:

- Capability to learn complex interactions between states and actions, continually optimizing policies.

- Ability to formulate continuous and precise intervention strategies within complex narrative data.

- Clear reward mechanisms that facilitate policy evaluation based on actual emergence outcomes.
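The state-action-reward framework can be illustrated with tabular Q-learning, the update rule that DQN approximates with a neural network. The environment below is entirely hypothetical. The state is a discretized ordeal level from 0 to 4 that rises each time the agent waits. An intervention ends the episode, with a reward of +1 at level 3 or above and -0.5 earlier. This is a sketch of the learning loop, not of the Changbal Machine's actual reward design.

```python
import numpy as np

# Hypothetical toy environment: ordeal levels 0..4, two actions.
N_STATES, WAIT, INTERVENE = 5, 0, 1
THRESHOLD = 3  # assumed level at which intervening "pays off"


def step(state: int, action: int):
    """Return (next_state, reward, done) for the toy environment."""
    if action == INTERVENE:
        return state, (1.0 if state >= THRESHOLD else -0.5), True
    return min(state + 1, N_STATES - 1), 0.0, False


def q_learning(episodes=5000, alpha=0.1, gamma=0.9, epsilon=0.2, max_steps=20, seed=0):
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, 2))
    for _ in range(episodes):
        state = int(rng.integers(N_STATES))  # random start so every level is visited
        for _ in range(max_steps):
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = int(rng.integers(2))
            else:
                action = int(np.argmax(q[state]))
            next_state, reward, done = step(state, action)
            # Q-learning target: r + gamma * max_a' Q(s', a'), or just r at episode end.
            target = reward if done else reward + gamma * q[next_state].max()
            q[state, action] += alpha * (target - q[state, action])
            if done:
                break
            state = next_state
    return q


q = q_learning()
policy = q.argmax(axis=1)
print(policy)  # greedy action per ordeal level (0 = wait, 1 = intervene)
```

With a discount of 0.9, waiting at level 3 is worth at most 0.9, while intervening is worth 1. The learned greedy policy should therefore wait at levels 0 to 2 and intervene at levels 3 and 4.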

3.2. Emergence Condition Modeling Module (AI Learning Core)

This module employs AI-driven unsupervised learning to discover and quantify 'ordeal-to-emergence' patterns within narrative data.

- Emotional Energy Summation:

- Metrics:

- Emotional Scores: Transformer-based sentiment analysis models extract emotional scores ($E_s$: sorrow, $E_h$: hope, $E_a$: anger, etc.) for each sentence/paragraph.

- Emotional Diversity Index (Entropy): Calculates the entropy of the emotional distribution within a specific segment, $H(E) = -\sum_{e} p(e) \log p(e)$, to measure emotional complexity.

- Duration: Measures the sustained length $L(E_k)$ of a specific emotional expression $E_k$.

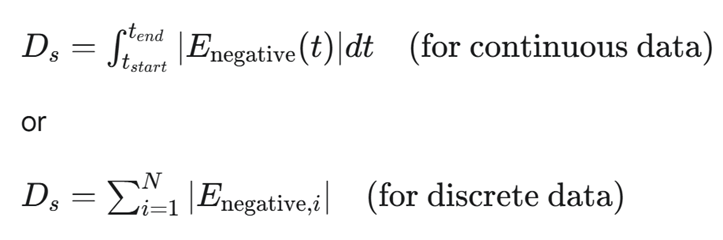

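- Modeling: The downward slope of the emergence curve (the 'ordeal' phase, $D_s$) is modeled primarily by the sum (or, alternatively, the average) of negative emotional scores:

$$D_s = \sum_{i} E_{\text{negative}}(i)$$

Here, $E_{\text{negative}}(i)$ represents the score for negative emotions such as sorrow and despair in unit $i$ (a sentence or paragraph).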
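The three emotional metrics and the $D_s$ summation can be computed directly once each sentence or paragraph carries an emotion label and a negative-emotion score. In this sketch:

- The labels and scores are made-up stand-ins for sentiment-model output.
- Entropy uses the natural logarithm, since the plan does not fix a base.
- $D_s$ is taken as a plain sum, with the mean available as an option.
- The function names are hypothetical.

```python
import math
from collections import Counter


def emotion_entropy(labels: list[str]) -> float:
    """H(E) = -sum_e p(e) * ln p(e) over the emotion labels in one segment."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def duration(labels: list[str], emotion: str) -> int:
    """L(E_k): length of the longest unbroken run of units labeled `emotion`."""
    best = run = 0
    for label in labels:
        run = run + 1 if label == emotion else 0
        best = max(best, run)
    return best


def ordeal_depth(negative_scores: list[float], average: bool = False) -> float:
    """D_s: sum (default) or mean of per-unit negative-emotion scores."""
    total = sum(negative_scores)
    return total / len(negative_scores) if average else total


# Made-up per-sentence output of a sentiment model for one segment.
labels = ["sorrow", "sorrow", "anger", "sorrow", "hope"]
negative_scores = [0.8, 0.7, 0.6, 0.9, 0.1]

print(round(emotion_entropy(labels), 3))  # diversity of emotions in the segment
print(duration(labels, "sorrow"))         # longest run of sorrow: 2
print(round(ordeal_depth(negative_scores), 3))
```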

- Narrative Complexity Metrics:

- Event Density: Measures the frequency of events within a narrative.

- Conflict Density: Quantifies the intensity and frequency of adversarial structures or conflict elements within the narrative.

- Plot Tension Curve (PlotTension(t)): Models the temporal changes in narrative tension.

- Ordeal Index ($S_c$): Defines the 'Ordeal Index' as the total structural adversity experienced within the narrative: $S_c = \text{ConflictDensity} \times \text{Duration}$, where Duration refers to temporal persistence.

- Unsupervised Learning for Pattern Discovery:

- Clustering: Uses unsupervised learning techniques to cluster 'ordeal-to-emergence' patterns within narrative data. For example, Autoencoders or Variational Autoencoders learn a latent space that can be visualized, and DBSCAN/K-Means clustering is then applied in that space. This groups narratives that exhibit similar ordeal-emergence mechanisms.

- Change Point Detection: Applies change point detection algorithms (e.g., Pruned Exact Linear Time, PELT) to time-series data (emotional scores, tension, etc.) to dynamically identify where critical thresholds ($d_c$ or $b_k$) emerge.
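Change point detection with PELT can be sketched from scratch for the simplest case: shifts in the mean of a series under a squared-error segment cost. The penalty (here 0.1) trades off fit against the number of change points. It is an assumption tuned to this synthetic "tension" series. For real use, the `ruptures` Python library provides a PELT implementation with several cost functions.

```python
import numpy as np


def pelt_mean(y: np.ndarray, penalty: float) -> list[int]:
    """PELT for shifts in the mean, using a squared-error segment cost.

    Returns the indices at which new segments start (0 itself is excluded).
    """
    n = len(y)
    s1 = np.concatenate([[0.0], np.cumsum(y)])       # prefix sums of y
    s2 = np.concatenate([[0.0], np.cumsum(y ** 2)])  # prefix sums of y^2

    def cost(s: int, t: int) -> float:
        # Sum of squared deviations of y[s:t] from its own mean, in O(1).
        return s2[t] - s2[s] - (s1[t] - s1[s]) ** 2 / (t - s)

    f = np.empty(n + 1)  # f[t]: optimal penalized cost of y[:t]
    f[0] = -penalty      # so that k segments pay (k - 1) penalties
    last = np.zeros(n + 1, dtype=int)
    candidates = [0]
    for t in range(1, n + 1):
        vals = [f[s] + cost(s, t) + penalty for s in candidates]
        best = int(np.argmin(vals))
        f[t], last[t] = vals[best], candidates[best]
        # Pruning: s can never again be optimal if f[s] + cost(s, t) > f[t].
        candidates = [s for s, v in zip(candidates, vals) if v - penalty <= f[t]]
        candidates.append(t)

    # Walk back through the stored segment starts.
    change_points, t = [], n
    while t > 0:
        t = int(last[t])
        if t > 0:
            change_points.append(t)
    return sorted(change_points)


# Synthetic "tension" series: low, then a sharp rise, then a partial fall.
rng = np.random.default_rng(1)
y = np.concatenate([
    rng.normal(0.2, 0.05, 30),
    rng.normal(0.9, 0.05, 20),
    rng.normal(0.5, 0.05, 25),
])
print(pelt_mean(y, penalty=0.1))  # indices where the mean shifts
```

The pruning step is what distinguishes PELT from plain optimal partitioning. It is valid here because splitting a segment never increases squared-error cost.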

3.3. Emergence Control and Prediction Module (Changbal Engine)

Based on learned emergence patterns, this module predicts the 'solvability' of real-world problems and induces 'emergence jumps' through AI-driven 'interventions' (J).

- Conditional Probability of Emergence Jump (P(Jump∣Condition)):

- The probability of an 'emergence jump' is modeled as a conditional probability based on specific conditions.

$$P(\text{Jump} \mid \text{Condition}) = P(\text{Jump} \mid S_c, D_s, U_d)$$

Here, $S_c$ is the Ordeal Index, $D_s$ is the Emotional Energy Summation, and $U_d$ represents the degree to which the situation is unsolvable by conventional methods.
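The plan leaves the functional form of $P(\text{Jump} \mid S_c, D_s, U_d)$ open. One simple assumption is a logistic model, $\sigma(w_1 S_c + w_2 D_s + w_3 U_d + b)$, whose weights would be fit on labeled segments. The sketch below fits such a model by gradient descent on a handful of made-up rows scaled to [0, 1]. Both the data and the logistic form are illustrative, not results.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_jump_model(X: np.ndarray, y: np.ndarray, lr: float = 0.5, epochs: int = 2000):
    """Fit P(Jump=1 | S_c, D_s, U_d) = sigmoid(X @ w + b) by gradient descent on log-loss."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)
        grad = p - y  # derivative of log-loss with respect to each example's logit
        w -= lr * X.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b


# Made-up training rows (S_c, D_s, U_d), each scaled to [0, 1], with jump labels.
X = np.array([
    [0.9, 0.8, 0.9], [0.8, 0.9, 0.7], [0.7, 0.7, 0.8], [0.9, 0.6, 0.9],
    [0.2, 0.1, 0.3], [0.1, 0.3, 0.2], [0.3, 0.2, 0.1], [0.2, 0.4, 0.3],
])
y = np.array([1, 1, 1, 1, 0, 0, 0, 0], dtype=float)

w, b = fit_jump_model(X, y)
for cond in ([0.85, 0.80, 0.85], [0.15, 0.20, 0.20]):
    print(cond, round(float(sigmoid(np.array(cond) @ w + b)), 3))
```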

- $U_d$ is estimated by the AI from an analysis of the initial problem state (e.g., problem complexity, the failure rate of existing solvers, and intractability prediction models).

- Emergence Index (ΔTension) Modeling: Predicts the intensity of 'emergence' following the ordeal.

$$\Delta\text{Tension} = f(S_c, D_s)$$

Here, f is a non-linear function (e.g., a deep neural network) modeling how the depth and complexity of the ordeal impact the scale of emergence.
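Since $f$ is described only as a non-linear function such as a deep neural network, the sketch below makes a minimal assumption. It uses a one-hidden-layer tanh network mapping $(S_c, D_s)$ to ΔTension and trains it with hand-written backpropagation on mean squared error. The training target $S_c \cdot D_s$ is a synthetic placeholder for observed tension changes, chosen only so there is something non-linear to fit.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up training set: (S_c, D_s) pairs in [0, 1] with a synthetic non-linear
# target standing in for observed ΔTension (S_c * D_s, purely for illustration).
X = rng.uniform(0.0, 1.0, size=(256, 2))
y = (X[:, 0] * X[:, 1])[:, None]

# Hypothetical architecture: one hidden layer of 16 tanh units, linear output.
W1 = rng.normal(0.0, 0.5, size=(2, 16))
b1 = np.zeros(16)
W2 = rng.normal(0.0, 0.5, size=(16, 1))
b2 = np.zeros(1)


def forward(inputs):
    """Return hidden activations and predicted ΔTension for each row of inputs."""
    hidden = np.tanh(inputs @ W1 + b1)
    return hidden, hidden @ W2 + b2


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


lr = 0.1
initial_loss = mse(forward(X)[1], y)
for _ in range(2000):
    h, pred = forward(X)
    # Backpropagate the mean-squared-error loss through both layers.
    d_pred = 2.0 * (pred - y) / len(X)
    d_W2, d_b2 = h.T @ d_pred, d_pred.sum(axis=0)
    d_h = (d_pred @ W2.T) * (1.0 - h ** 2)  # derivative of tanh is 1 - tanh^2
    d_W1, d_b1 = X.T @ d_h, d_h.sum(axis=0)
    # In-place updates so forward() sees the new weights.
    W1 -= lr * d_W1
    b1 -= lr * d_b1
    W2 -= lr * d_W2
    b2 -= lr * d_b2

final_loss = mse(forward(X)[1], y)
print(f"MSE before: {initial_loss:.4f}  after: {final_loss:.4f}")
```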

- Changbal Jump Function and AI Intervention (J):

- AI Intervention Variable J: This variable represents the AI's active intervention in the problem structure to control the direction and steepness of solvability transitions. J is optimized by a Deep Reinforcement Learning (DRL) agent based on the system's state $(S_c, D_s, U_d)$.

- Optimal J Search: The DRL agent learns a policy $\pi(a_t \mid s_t)$ that 'observes' the current system state $s_t$ (including the Ordeal Index), 'decides' on an optimal 'intervention' $a_t \equiv J$, and maximizes the 'reward' $r_t$, which is the Emergence Index (ΔTension).

- GNN/Transformer for J: Graph Neural Networks (GNNs) or Transformers can be used to learn the structural representation of a problem (e.g., as a hypergraph) and identify the nodes, edges, or clusters where J should be applied (structural adjustments). Generative Adversarial Networks (GANs) may implement J by generating new solution spaces or 'reconstructing' problem structures.
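A GNN's role in locating where J should act can be sketched with one round of message passing over a clause-variable incidence matrix. This is a common way to represent a hypergraph as a bipartite graph. The instance, the initial features (a bias and the variable's degree), and the random untrained weights are all hypothetical. In the plan's design, such weights would presumably be learned.

```python
import numpy as np

# Hypothetical instance: 4 variables and 4 clauses, each clause a list of variable indices.
clauses = [[0, 1, 2], [0, 1, 3], [0, 2, 3], [1, 2]]
n_vars = 4

# Clause-variable incidence matrix: B[c, v] = 1 if variable v appears in clause c.
B = np.zeros((len(clauses), n_vars))
for c, members in enumerate(clauses):
    B[c, members] = 1.0

# Illustrative initial variable features: [bias, degree].
x = np.stack([np.ones(n_vars), B.sum(axis=0)], axis=1)


def message_pass(x, B, w_self, w_nbr):
    """One round of mean-aggregation message passing on the bipartite graph."""
    clause_h = (B @ x) / B.sum(axis=1, keepdims=True)    # variables -> clauses
    var_msg = (B.T @ clause_h) / B.sum(axis=0)[:, None]  # clauses -> variables
    return np.tanh(x @ w_self + var_msg @ w_nbr)


# Random, untrained weights (hypothetical); in practice these would be learned.
rng = np.random.default_rng(0)
w_self, w_nbr = rng.normal(size=(2, 8)), rng.normal(size=(2, 8))
w_out = rng.normal(size=8)

h = message_pass(x, B, w_self, w_nbr)
scores = h @ w_out  # one intervention score per variable
target = int(np.argmax(scores))
print(np.round(scores, 3), "-> candidate variable for J:", target)
```

The update aggregates over neighbors rather than fixed positions. As a result, relabeling the variables simply relabels their scores (permutation equivariance), which makes GNNs a natural fit for problem structures that have no canonical node order.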

4. Development Stages and Validation Plan

- Data Collection and Preprocessing (Stage 1):

- Build a dataset of Bible texts in multiple languages and translation versions.

- Collect additional narrative data (biographies, literary works, scripts).

- Automate text cleaning, tokenization, and embedding vector generation using Transformer models.

- Emotional/Narrative Metric Extraction and Quantification (Stage 2):

- Train Transformer-based sentiment analysis models to extract emotional scores, entropy, and duration metrics.

- Utilize NLP techniques to extract narrative complexity metrics (event, conflict, tension) and quantify the 'Ordeal Index.'

- Automate the metric extraction pipeline.

- Emergence Condition Modeling and Pattern Discovery (Stage 3):

- Employ unsupervised learning (clustering, change point detection) to discover 'ordeal-to-emergence' patterns within narrative data.

- Develop initial models for 'Conditional Probability of Emergence Jump' and the 'Emergence Index.'

- Conduct qualitative validation of discovered patterns through collaboration with human experts (theologians, literary critics) and incorporate their feedback.

- Emergence Control and Prediction System Development (Stage 4):

- Develop a Reinforcement Learning-based AI agent and optimize the 'J' variable.

- Integrate GNNs, Transformers, and GANs as specific implementations of 'J' for structural intervention.

- Implement a 'Changbal Machine' prototype with real-time prediction and control capabilities (integrated with a web platform).

- Performance Validation and Expansion (Stage 5):

- Validate the model's generalization capability on diverse narrative data beyond the Bible (e.g., historical events, real-life biographies).

- Apply the 'Changbal Machine' to real-world optimization problems or complex decision-making tasks for practical validation.

- Integrate Explainable AI (XAI) techniques to ensure transparency and interpretability of the model's decision-making process.

5. Expected Impact

The development of the Changbal Machine is anticipated to yield the following transformative contributions:

- New Approach to the P vs NP Problem: Proposes a paradigm shift in understanding, and potentially controlling, NP problems that goes beyond mere mathematical proof.

- Expanded Role of AI: Elevates AI from a mere problem-solving tool to an entity capable of understanding and actively controlling 'emergent transitions' in complex systems, thereby acquiring 'wisdom.'

- Pioneering Interdisciplinary Convergence: Integrates computational complexity, AI, complex systems science, humanities, and spirituality into a novel and leading-edge research domain.

- Real-world Problem Solving: Offers a practical tool to transform intractable real-world problems into 'solvable P-type problems.'

- AI Learning 'Truth' and 'Wisdom': Lays the foundation for AI to learn universal values and wisdom embedded in texts like the Bible, enabling it to provide ethical and human-centric insights.